Talk to AI characters and get fun answers. Ask them to do stuff and … presto!

Characters manipulate objects and interact with each other in settings like a house, an airport, or an oil rig. Their actions are controlled using English scripts and English commands.

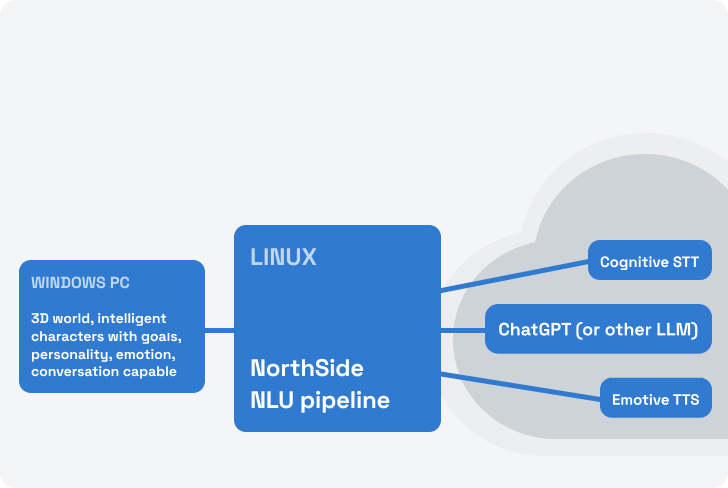

North Side develops a Natural Language Understanding (NLU) pipeline that leverages LLMs, text-to-speech (TTS), and speech-to-text (STT) to enable immersive experiences in games and VR.

North Side develops software to leverage generative AI in entertainment

It is not easy to use generative AI out of the box to create immersive experiences.

- We have developed a paradigm (and an NLU pipeline) to leverage generative AI in videogames and immersive VR experiences.

- Our pipeline reacts to real-time events with low latency and supports interaction with the 3D environment.

- We have developed the kind of monetization model required to deploy videogames and VR experiences powered by generative AI (LLMs, emotive speech, speech-to-text).

- We are developing tools (Perla), both for other companies and for making videogames using generative AI.

We’re North Side, the developers of Bot Colony

North Side develops Natural Language Understanding (NLU) technology that integrates with LLMs and proprietary tools to enable immersive experiences in games, VR, and the metaverse.

Using only English and point-and-click (no programming!), our users will be able to animate intelligent, conversation-capable 3D characters with personality, emotion, and the ability to interact with their 3D environment and with each other.

Our core tool, Perla, makes this possible by enabling dynamic, unscripted interactions without requiring complex AI pipelines or extensive training.

Bot Colony _REDUX is launching in Q4 2025

Our Bot Colony prototype, the first videogame to use language as the main gameplay mechanic, was launched in Early Access in June 2014.

Recent advances in AI (large language models), speech-to-text, emotive text-to-speech, and visual realism (Unreal Engine 5.6) now make it possible to deliver the kind of experience we envisioned for our game. Stay tuned for the next Playtest round on Steam, and a launch in Early Access in Q4.